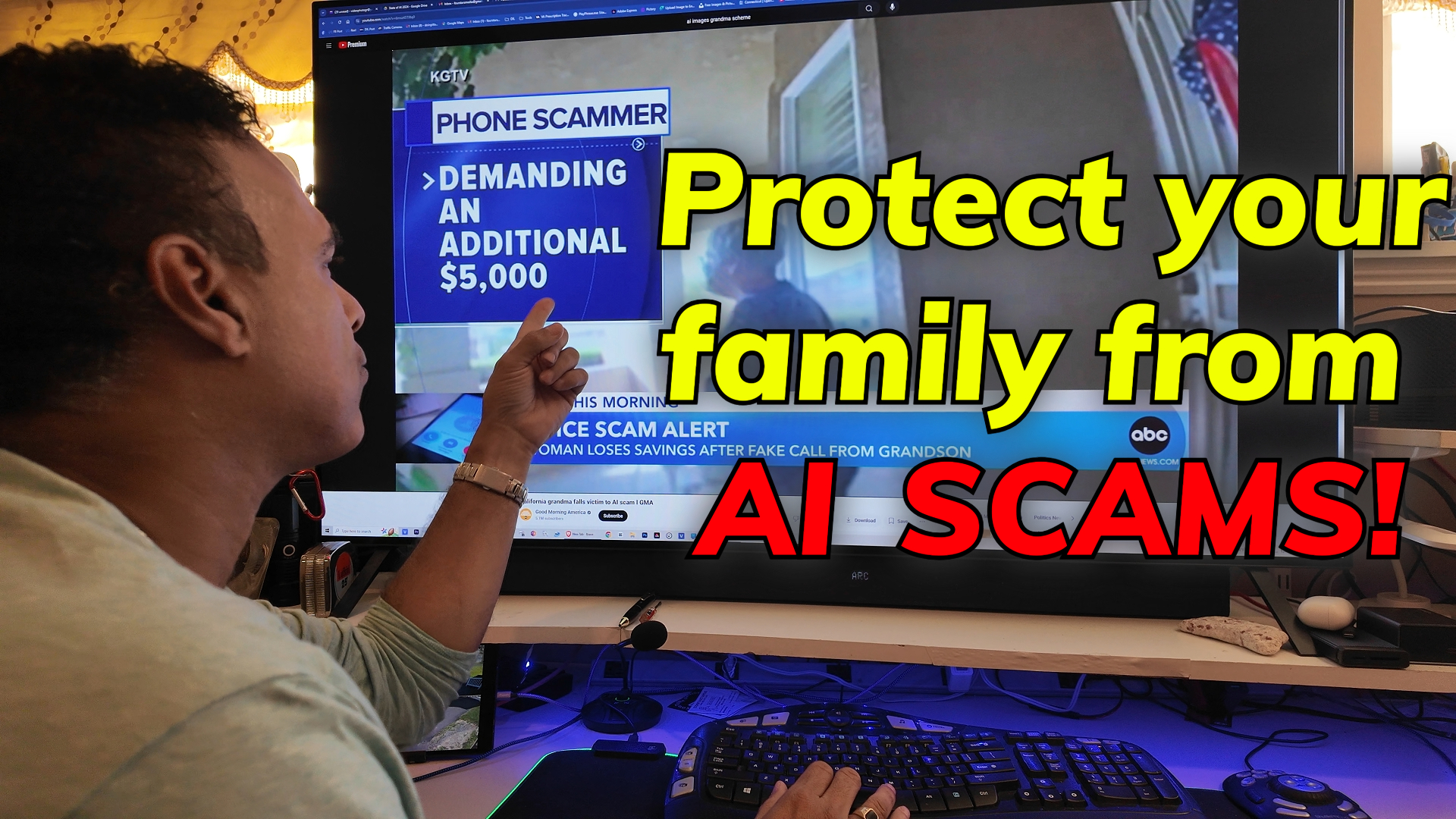

Scammers are increasingly using artificial intelligence (AI) to clone the voices of grandchildren and trick grandparents into handing over large sums of money. AI has made these schemes, known as “grandma scams,” more sophisticated and more convincing.

In a recent incident reported by ABC News, scammers targeted an 89-year-old woman named Shirley, using AI to create a voice that sounded exactly like her grandson’s. The caller told Shirley that her grandson had been in a car accident and needed money to avoid arrest. Shirley believed the story and handed over $9,000 in cash.

Experts warn that these scams are becoming more common and harder to detect. Scammers can easily obtain a few seconds of someone’s voice from social media and use AI to generate a realistic imitation for a phone call or other communication.

To guard against these scams, experts recommend that grandparents set up a password or passphrase that only they and their grandchildren know. A grandparent who receives a call from someone claiming to be a grandchild and asking for money should ask for the password. If the caller cannot provide it, the call is likely a scam.

It is also important to talk regularly with grandchildren and keep up to date on their lives. Knowing what is going on with them makes it easier to spot inconsistencies in a scammer’s story.

If you or someone you know has been the victim of a scam, it is important to report it to the police. You can also report scams to the Federal Trade Commission (FTC).